elasticsearch + search-guard + filebeat + metricbeat + logstash + kibana: Complete ELK Installation and Configuration Tutorial

* java.security.SecurityPermission setProperty.ocsp.enable

* java.util.PropertyPermission * read,write

* java.util.PropertyPermission org.apache.xml.security.ignoreLineBreaks write

* javax.security.auth.AuthPermission doAs

* javax.security.auth.AuthPermission modifyPrivateCredentials

* javax.security.auth.kerberos.ServicePermission * accept

See http://docs.oracle.com/javase/8/docs/technotes/guides/security/permissions.html

for descriptions of what these permissions allow and the associated risks.

-> Installed search-guard-6

(6) Edit the elasticsearch.yml configuration file


======================== Elasticsearch Configuration =========================

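---------------------------------- Cluster -----------------------------------

Cluster name

cluster.name: es-cluster

------------------------------------ Node ------------------------------------

Node name

node.name: es-node-1

Whether this node is eligible to be elected master

node.master: true

Whether this node stores data

node.data: true

----------------------------------- Paths ------------------------------------

Data directory (create it yourself)

path.data: /data/elk/es/data

Log directory (create it yourself)

path.logs: /data/elk/es/logs

----------------------------------- Memory -----------------------------------

Set to true to lock the process memory

bootstrap.memory_lock: true

---------------------------------- Network -----------------------------------

Address to listen on (0.0.0.0 accepts requests from any machine)

network.host: 0.0.0.0

HTTP port to listen on (9200 is the default)

http.port: 9200

--------------------------------- Discovery ----------------------------------

Initial list of master-eligible nodes in the cluster; a node (master or data) probes this list when it starts

discovery.zen.ping.unicast.hosts: ["10.253.177.35", "10.253.177.36", "10.253.177.37"]

Minimum number of master-eligible nodes; the recommended value is (number of master-eligible nodes / 2) + 1

discovery.zen.minimum_master_nodes: 2

discovery.zen.ping_timeout: 30s

The following three settings solve cross-origin (CORS) problems for browser front ends

http.cors.enabled: true

http.cors.allow-credentials: true

http.cors.allow-origin: "/.*/"

X-Pack security must be set to false

xpack.security.enabled: false

Search Guard node certificate; on each node, use that node's own certificate

searchguard.ssl.transport.pemcert_filepath: key/search-guard-certificates/node-certificates/CN=es-node-1.crtfull.pem

Search Guard node private key

searchguard.ssl.transport.pemkey_filepath: key/search-guard-certificates/node-certificates/CN=es-node-1.key.pem

The key password can be found in README.txt in the extracted certificates folder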

searchguard.ssl.transport.pemkey_password: 7719b9f699c993abb4d0

searchguard.ssl.transport.pemtrustedcas_filepath: key/search-guard-certificates/chain-ca.pem

searchguard.ssl.transport.enforce_hostname_verification: false

searchguard.ssl.http.enabled: true

searchguard.ssl.http.pemcert_filepath: key/search-guard-certificates/node-certificates/CN=es-node-1.crtfull.pem

searchguard.ssl.http.pemkey_filepath: key/search-guard-certificates/node-certificates/CN=es-node-1.key.pem

searchguard.ssl.http.pemkey_password: 7719b9f699c993abb4d0

searchguard.ssl.http.pemtrustedcas_filepath: key/search-guard-certificates/chain-ca.pem

searchguard.authcz.admin_dn:

- CN=sgadmin

searchguard.audit.type: internal_elasticsearch

searchguard.enable_snapshot_restore_privilege: true

searchguard.check_snapshot_restore_write_privileges: true

searchguard.restapi.roles_enabled: ["sg_all_access"]
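The value of discovery.zen.minimum_master_nodes in the configuration above follows the rule (master-eligible nodes / 2) + 1 with integer division. A minimal sketch of that arithmetic, where the function name `minimum_master_nodes` is only an illustrative helper and not part of Elasticsearch:

```python
def minimum_master_nodes(master_eligible: int) -> int:
    """Quorum size for a cluster: floor(n / 2) + 1."""
    if master_eligible < 1:
        raise ValueError("need at least one master-eligible node")
    return master_eligible // 2 + 1


if __name__ == "__main__":
    # The tutorial's cluster has three master-eligible nodes.
    for n in (1, 2, 3, 5):
        print(n, "master-eligible nodes ->", minimum_master_nodes(n))
```

For the three-node cluster used here the result is 2, which matches the configured value.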

The pemkey\_password values above can be found in README.txt inside search-guard-certificates- …… .tar.gz


README.txt

Passwords

Common passwords

Root CA password: 495f7fc3b340008ff918413cdfca56d8d7e8a047

Truststore password: 96808e3ec8fe2c14f74f

Admin keystore and private key password: 53b895fd2d74ef87d643

Demouser keystore and private key password: f2ad809cf899194ec804

Host/Node specific passwords

Host: es-node-1

es-node-1 keystore and private key password: 7719b9f699c993abb4d0

es-node-1 keystore: node-certificates/CN=es-node-1-keystore.jks

es-node-1 PEM certificate: node-certificates/CN=es-node-1.crtfull.pem

es-node-1 PEM private key: node-certificates/CN=es-node-1.key.pem

Host: es-node-2

es-node-2 keystore and private key password: 280c91c6ac87998eec2e

es-node-2 keystore: node-certificates/CN=es-node-2-keystore.jks

es-node-2 PEM certificate: node-certificates/CN=es-node-2.crtfull.pem

es-node-2 PEM private key: node-certificates/CN=es-node-2.key.pem

Host: es-node-3

es-node-3 keystore and private key password: 48241eecae07f957b8da

es-node-3 keystore: node-certificates/CN=es-node-3-keystore.jks

es-node-3 PEM certificate: node-certificates/CN=es-node-3.crtfull.pem

es-node-3 PEM private key: node-certificates/CN=es-node-3.key.pem
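Since every node has its own key password, it can help to pull the passwords out of README.txt programmatically rather than copying them by hand. The sketch below assumes the README keeps the line format shown above ("NAME keystore and private key password: VALUE"); the function name `node_passwords` is hypothetical.

```python
import re

# Matches lines such as "es-node-1 keystore and private key password: 7719b9f6..."
PASSWORD_LINE = re.compile(
    r"^(?P<name>\S+) keystore and private key password:\s*(?P<password>\S+)$"
)


def node_passwords(readme_text: str) -> dict:
    """Map each name (es-node-1, Admin, Demouser, ...) to its key password."""
    passwords = {}
    for raw in readme_text.splitlines():
        match = PASSWORD_LINE.match(raw.strip())
        if match:
            passwords[match.group("name")] = match.group("password")
    return passwords


if __name__ == "__main__":
    sample = """Host: es-node-1
es-node-1 keystore and private key password: 7719b9f699c993abb4d0
es-node-1 keystore: node-certificates/CN=es-node-1-keystore.jks
Host: es-node-2
es-node-2 keystore and private key password: 280c91c6ac87998eec2e
"""
    print(node_passwords(sample))
```

Note that the "Admin" and "Demouser" lines use the same wording, so they are returned too; the Root CA and truststore passwords use different wording and are not matched.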

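(7) Configure the other two machines the same way, changing node.name, the certificate file names, and the key passwords to match each node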
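The node-specific lines are the only part of elasticsearch.yml that differs between machines. As a rough sketch, they could be generated from the node name and its password; `node_ssl_settings` is a hypothetical helper, and the default `cert_dir` simply mirrors the relative path used in the configuration above.

```python
def node_ssl_settings(node_name: str, key_password: str,
                      cert_dir: str = "key/search-guard-certificates") -> list:
    """Return the elasticsearch.yml lines that change from node to node."""
    cert = f"{cert_dir}/node-certificates/CN={node_name}.crtfull.pem"
    key = f"{cert_dir}/node-certificates/CN={node_name}.key.pem"
    lines = [f"node.name: {node_name}"]
    # The same certificate and key are used for the transport and HTTP layers.
    for layer in ("transport", "http"):
        prefix = f"searchguard.ssl.{layer}"
        lines.append(f"{prefix}.pemcert_filepath: {cert}")
        lines.append(f"{prefix}.pemkey_filepath: {key}")
        lines.append(f"{prefix}.pemkey_password: {key_password}")
    return lines


if __name__ == "__main__":
    print("\n".join(node_ssl_settings("es-node-2", "280c91c6ac87998eec2e")))
```

The remaining settings (cluster name, discovery hosts, CA chain, admin DN) stay identical on all three nodes.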

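(8) Stop and restart Elasticsearch on all three machines (*****)

(9) Initialize the Search Guard permission configuration: copy the sgadmin client certificate and key into the plugin directory. The steps are also listed in README.txt. Do this on each of the three Elasticsearch machines in turn; the steps are identical.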


README.txt

On the node where you want to execute sgadmin on:

* Copy the file 'root-ca.pem' to the directory 'plugins/search-guard-/tools'

* Copy the file 'client-certificates/CN=sgadmin.crtfull.pem' to the directory 'plugins/search-guard-/tools'

* Copy the file 'client-certificates/CN=sgadmin.key.pem' to the directory 'plugins/search-guard-/tools'

Change to the 'plugins/search-guard-/tools' and execute:

chmod 755 ./sgadmin.sh

./sgadmin.sh -cacert root-ca.pem -cert CN=sgadmin.crtfull.pem -key CN=sgadmin.key.pem -keypass 53b895fd2d74ef87d643 -nhnv -icl -cd ../sgconfig/

The steps are as follows:


cd /usr/local/elasticsearch/config/key/search-guard-certificates

cp root-ca.pem chain-ca.pem client-certificates/CN=sgadmin.key.pem client-certificates/CN=sgadmin.crtfull.pem ../../../plugins/search-guard-6/tools

cd ../../../plugins/search-guard-6/tools

chmod 755 sgadmin.sh

./sgadmin.sh -cacert root-ca.pem -cert CN=sgadmin.crtfull.pem -key CN=sgadmin.key.pem -keypass 53b895fd2d74ef87d643 -nhnv -icl -cd ../sgconfig/

Search Guard Admin v6

Will connect to localhost:9300 … done

Elasticsearch Version: 6.4.0

Search Guard Version: 6.4.0-23.1

Connected as CN=sgadmin

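The run may hang at the next step. This is nothing to worry about: run the same sgadmin initialization on the other two machines and this step will then continue (*****)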

Contacting elasticsearch cluster 'elasticsearch' and wait for YELLOW clusterstate …

Clustername: GHS-ELK

Clusterstate: GREEN

Number of nodes: 3

Number of data nodes: 3

searchguard index already exists, so we do not need to create one.

Populate config from /usr/local/elasticsearch/plugins/search-guard-6/sgconfig

Will update 'sg/config' with ../sgconfig/sg_config.yml

SUCC: Configuration for 'config' created or updated

Will update 'sg/roles' with ../sgconfig/sg_roles.yml

SUCC: Configuration for 'roles' created or updated

Will update 'sg/rolesmapping' with ../sgconfig/sg_roles_mapping.yml

SUCC: Configuration for 'rolesmapping' created or updated

Will update 'sg/internalusers' with ../sgconfig/sg_internal_users.yml

SUCC: Configuration for 'internalusers' created or updated

Will update 'sg/actiongroups' with ../sgconfig/sg_action_groups.yml

SUCC: Configuration for 'actiongroups' created or updated

Done with success
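When sgadmin is run from a script on all three machines, the captured output can be checked for the same markers that appear in the log above. This is only a sketch based on the output shown in this tutorial; other Search Guard versions may word their messages differently, and `sgadmin_succeeded` is a hypothetical helper.

```python
import re

# The five configuration types reported in the sgadmin log above.
EXPECTED_TYPES = ("config", "roles", "rolesmapping", "internalusers", "actiongroups")

# \W? tolerates whichever quote character surrounds the type name.
SUCC_LINE = re.compile(r"SUCC: Configuration for \W?(\w+)\W? created or updated")


def sgadmin_succeeded(output: str) -> bool:
    """True if every expected type was updated and the final marker is present."""
    updated = set(SUCC_LINE.findall(output))
    all_updated = all(name in updated for name in EXPECTED_TYPES)
    return all_updated and "Done with success" in output


if __name__ == "__main__":
    log = "\n".join(
        f"SUCC: Configuration for '{name}' created or updated" for name in EXPECTED_TYPES
    ) + "\nDone with success\n"
    print(sgadmin_succeeded(log))
```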

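(10) Verify: open https://ip:9200 in a browser, where ip is the address of any one of the ES nodes. You will be prompted for a username and password; the default account is admin/admin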
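The same verification can be scripted. The sketch below uses only the Python standard library and queries the `_cluster/health` endpoint with HTTP basic authentication. Certificate verification is switched off here on the assumption that the nodes use the self-signed demo certificates from this tutorial; that is not a setting to carry into production. The function names are illustrative.

```python
import base64
import json
import ssl
import urllib.request


def basic_auth_header(user: str, password: str) -> str:
    """Build the value of the HTTP Authorization header for basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def cluster_health(host: str, user: str = "admin", password: str = "admin",
                   port: int = 9200) -> dict:
    """Fetch /_cluster/health over https without verifying the certificate."""
    context = ssl.create_default_context()
    context.check_hostname = False       # must be disabled before CERT_NONE
    context.verify_mode = ssl.CERT_NONE  # assumption: self-signed demo certificates
    request = urllib.request.Request(
        f"https://{host}:{port}/_cluster/health",
        headers={"Authorization": basic_auth_header(user, password)},
    )
    with urllib.request.urlopen(request, timeout=10, context=context) as response:
        return json.load(response)


# Example (requires a reachable node): cluster_health("10.253.177.35")["status"]
```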

### 2.2 Kibana installation

(1) Upload the Kibana archive and extract it

(2) Go to the config directory under the Kibana directory and edit kibana.yml


Port to listen on

server.port: 5601

Address to listen on

server.host: "10.253.177.31"

server.name: "log-kibana"

Elasticsearch address to read logs from; it is https because Elasticsearch is secured with Search Guard

elasticsearch.hosts: ["https://10.253.177.35:9200"]

Elasticsearch username (required)

elasticsearch.username: "admin"

Elasticsearch password (required)

elasticsearch.password: "admin"

SSL verification mode (must be none)

elasticsearch.ssl.verificationMode: none

X-Pack security (must be false)

xpack.security.enabled: false

Kibana index

kibana.index: ".kibana"

Language

i18n.locale: "zh-CN"

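(3) Go to Kibana's bin directory and run ./kibana & to start Kibana, then open 192.168.1.114:5601 in a browser to view it (substitute the address configured in server.host)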

### 2.3 Metricbeat installation

(1) Upload the Metricbeat archive and extract it

(2) Go to the Metricbeat directory and edit metricbeat.yml


metricbeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

#==================== Elasticsearch template setting ==========================

setup.template.settings:

  index.number_of_shards: 3

  index.codec: best_compression

  #_source.enabled: false

#============================== Kibana =====================================

setup.kibana:

#================================ Outputs =====================================

#-------------------------- Elasticsearch output ------------------------------

output.elasticsearch:

  hosts: ["10.253.177.35", "10.253.177.36", "10.253.177.37"]

The following four settings are required because Elasticsearch is secured with Search Guard

  protocol: "https"

  username: "admin"

  password: "admin"

  ssl.verification_mode: "none"

#================================ Processors =====================================

processors:

  - add_host_metadata: ~

  - add_cloud_metadata: ~

(3) In the Metricbeat directory, run ./metricbeat -e -c metricbeat.yml & to start Metricbeat

(4) Repeat the steps above on every machine where Metricbeat is needed

### 2.4 Filebeat installation

(1) Upload the Filebeat archive and extract it

(2) Go to the Filebeat directory and edit filebeat.yml


#=========================== Filebeat inputs =============================

filebeat.inputs:

- type: log

  enabled: true

Multiple paths can be listed here

  paths:

    - /opt/kettle/log/*/*.log

The content of tags is user-defined

  tags: ["kettle-log"]

fields holds user-defined fields

  fields:

    application-name: kettle application

    log-type: kettle log

    log-server: 192.168.1.101

    log-conf-name: "*.log"

Set this to true so the custom fields are stored at the top level of the event instead of under fields

  fields_under_root: true

Content a log entry must contain to be shipped

  include_lines: ['ERROR','PANIC','FATAL','WARNING']

Handling of log entries that span multiple lines; multiline.pattern takes a regular expression

  multiline.pattern: 'Logging is at level'

true or false, default false. With false, lines that match the pattern are merged into the previous line; with true, lines that do not match the pattern are merged into the previous line

  multiline.negate: true

after or before: whether the merged lines are added at the end or at the beginning of the previous line

  multiline.match: after

  multiline.flush_pattern: 'Processing ended'

#============================= Filebeat modules ===============================

filebeat.config.modules:

  path: ${path.config}/modules.d/*.yml

  reload.enabled: false

#==================== Elasticsearch template setting ==========================

setup.template.settings:

  index.number_of_shards: 3

#================================ Outputs =====================================

#----------------------------- Logstash output --------------------------------

output.logstash:

  hosts: ["192.168.1.114:5044"]

#================================ Processors =====================================

processors:

  - add_host_metadata: ~

  - add_cloud_metadata: ~

(3) In the Filebeat directory, run ./filebeat & to start Filebeat
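To make the multiline settings in filebeat.yml easier to reason about, here is a small Python emulation of the grouping they describe: with negate set to true and match set to after, every line that does not contain 'Logging is at level' is appended to the preceding line that does. This is a simplified model and not Filebeat's implementation: it assumes the line matching flush_pattern is kept in the event it closes, it applies the include_lines filter after merging, and it treats the include_lines entries as plain substrings rather than regular expressions. The sample log lines are invented.

```python
import re


def merge_multiline(lines, pattern, negate=True, flush_pattern=None):
    """Group raw lines into events, modelling multiline.match: after."""
    start_re = re.compile(pattern)
    flush_re = re.compile(flush_pattern) if flush_pattern else None
    events, current = [], []
    for line in lines:
        matched = bool(start_re.search(line))
        # A line continues the current event when (matched XOR negate) is true.
        continuation = matched != negate
        if continuation and current:
            current.append(line)
        else:
            if current:
                events.append("\n".join(current))
            current = [line]
        # Assumption: a flush_pattern match closes the event, keeping the line.
        if flush_re and flush_re.search(line):
            events.append("\n".join(current))
            current = []
    if current:
        events.append("\n".join(current))
    return events


def include(events, keywords=("ERROR", "PANIC", "FATAL", "WARNING")):
    """Keep only events containing at least one keyword (substring match)."""
    return [event for event in events if any(k in event for k in keywords)]


if __name__ == "__main__":
    raw = [
        "2019/03/05 10:00:00 - Kitchen - Logging is at level : Basic",
        "2019/03/05 10:00:01 - job - ERROR something failed",
        "2019/03/05 10:00:02 - Kitchen - Processing ended",
        "2019/03/05 11:00:00 - Kitchen - Logging is at level : Basic",
        "2019/03/05 11:00:01 - job - finished",
    ]
    merged = merge_multiline(raw, "Logging is at level", True, "Processing ended")
    print(len(merged), "events,", len(include(merged)), "kept")
```

In this model each Kettle run becomes one event, and a run is shipped only if some line in it contains one of the listed keywords.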

### 2.5 Logstash installation (*****)

(1) Upload the Logstash archive and extract it

(2) Go to Logstash's config directory and create a file named filebeat.conf

(3) Edit filebeat.conf


input {

  beats {

    port => 5044

  }

}

filter {

  if "kettle-log" in [tags] {

    grok {

      pattern_definitions => {

        # Custom pattern definition

        "CUSTOM_TIME" => "20%{YEAR}/%{MONTHNUM}/%{MONTHDAY} %{HOUR}:?%{MINUTE}(?::?%{SECOND})"

      }

      match => {

        # Split the log line into two fields

        "message" => "%{CUSTOM_TIME:time}\s*-\s*%{GREEDYDATA:message}"

      }

      # Overwrite the original message field

      overwrite => ["message"]

    }

    mutate {

      # Remove the built-in @version field

      remove_field => ["@version"]

    }

  }

  ………………………………

}

output {

  if "kettle-log" in [tags] {

    elasticsearch {

      # The following six settings are required because Elasticsearch is secured with Search Guard; the truststore password can be found in Search Guard's README.txt

      user => "admin"

      password => "admin"

      # Whether to enable SSL; it must be true because we connect over https

      ssl => true

      # Unlike in Kibana, ssl_certificate_verification cannot be set to false here: doing so still produces errors, so it must be true, and truststore and truststore_password below then become mandatory. (PS: I checked the issues on the author's GitHub and found no fix; the author's comments in the source code state that this option has never worked properly and is deprecated.)

      ssl_certificate_verification => true

      truststore => "/home/elk/logstash/logstash-6.6.1/config/key/truststore.jks"

      truststore_password => "96808e3ec8fe2c14f74f"

      hosts => ["es-node-1:9200", "es-node-2:9200", "es-node-3:9200"]

      # Index for these logs in Elasticsearch

      index => "kettle-log"

    }

  }

  ………………………………

}
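The grok filter splits each line into a timestamp and the remaining message, separated by a hyphen with optional whitespace around it. The Python regular expression below is a rough approximation of that expression, intended for testing sample lines outside Logstash. It is not a one-to-one translation of the grok patterns (for example, it accepts any two digits for the month and day), and the sample line is invented.

```python
import re

# Approximation of: %{CUSTOM_TIME:time}\s*-\s*%{GREEDYDATA:message}
LINE_RE = re.compile(
    r"(?P<time>20\d{2}/\d{1,2}/\d{1,2} \d{1,2}:?\d{2}:?\d{2}(?:[.,]\d+)?)"
    r"\s*-\s*(?P<message>.*)"
)


def split_line(line):
    """Return {'time': ..., 'message': ...} or None if the line does not match."""
    match = LINE_RE.search(line)  # grok also matches anywhere in the line
    if match is None:
        return None
    return {"time": match.group("time"), "message": match.group("message")}


if __name__ == "__main__":
    print(split_line("2019/03/05 10:22:33 - Spoon - Logging is at level : Basic"))
```

Lines that do not start with a timestamp in this format return None here; in Logstash the corresponding events would be tagged with a grok parse failure instead.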

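(4) How to configure truststore and truststore\_password

truststore: for now, reuse the truststore.jks from the Elasticsearch installation and copy it over from an ES machine.

truststore\_password: this password can be found in Search Guard's README.txt.

One critical step remains: the connection setting hosts => ["es-node-1:9200", "es-node-2:9200", "es-node-3:9200"]. The certificates are issued for the ES node names (as README.txt shows), so hosts must be written this way, which means /etc/hosts has to map the three ES node names to their correct addresses.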


vi /etc/hosts

192.168.1.111 es-node-1

192.168.1.112 es-node-2

192.168.1.113 es-node-3
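Before starting Logstash it is worth confirming that all three node names really are mapped. A minimal sketch that parses hosts-file text and reports the names still missing; `missing_host_entries` is a hypothetical helper, and the node names are the ones used in this tutorial.

```python
def missing_host_entries(hosts_text, required):
    """Return the names from `required` that have no mapping in hosts_text."""
    mapped = set()
    for raw in hosts_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        mapped.update(fields[1:])  # first field is the IP, the rest are names
    return [name for name in required if name not in mapped]


if __name__ == "__main__":
    with open("/etc/hosts", encoding="utf-8") as handle:
        missing = missing_host_entries(
            handle.read(), ["es-node-1", "es-node-2", "es-node-3"]
        )
    print("missing entries:", missing or "none")
```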

(5) Go to Logstash's bin directory and run ./logstash -f ../config/filebeat.conf & to start Logstash

### 2.6 ELK startup order

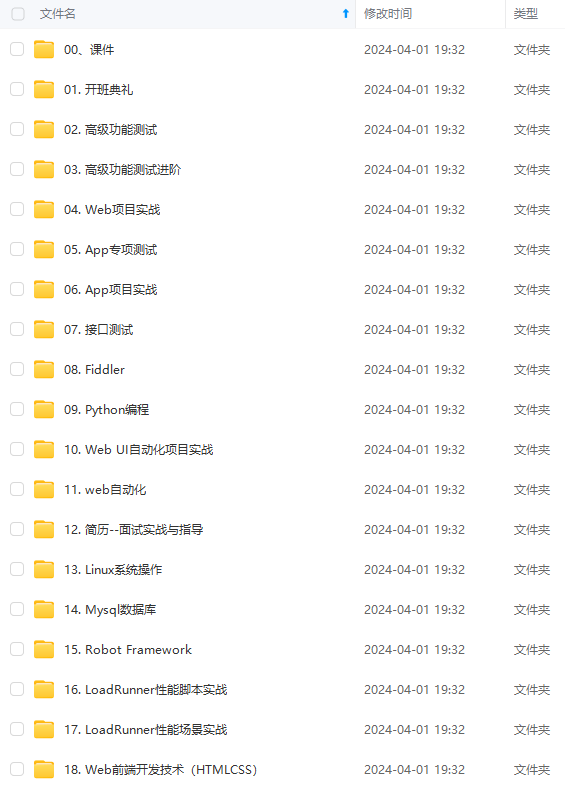

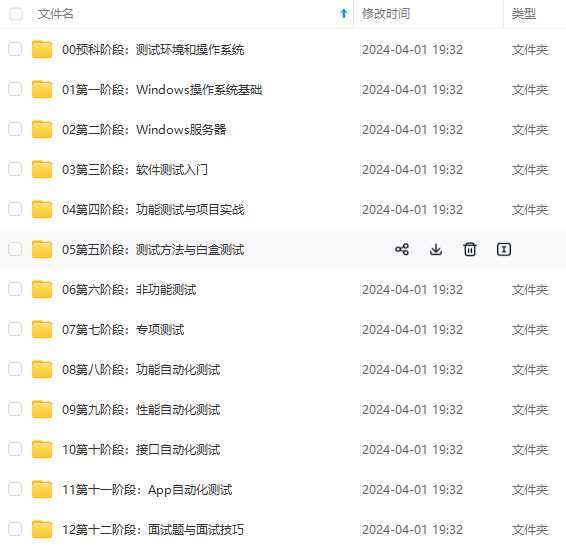

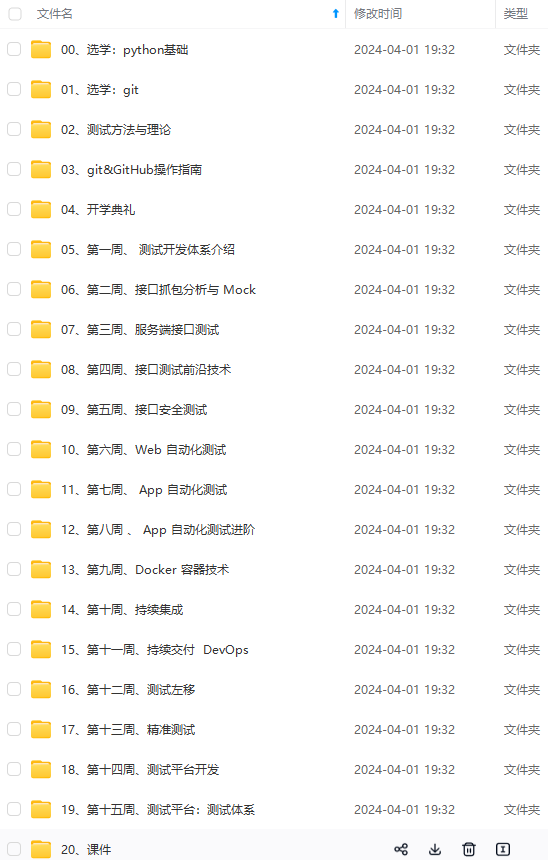
